Py: Neural Net Classification

This notebook was originally created by Hugh Miller for the Data Analytics Applications subject, as Exercise 5.18 - Spam detection with a neural network in the DAA M05 Classification and neural networks module.

Data Analytics Applications is a Fellowship Applications (Module 3) subject with the Actuaries Institute that aims to teach students how to apply a range of data analytics skills, such as neural networks, natural language processing, unsupervised learning and optimisation techniques, together with their professional judgement, to solve a variety of complex and challenging business problems. The business problems used as examples in this subject are drawn from a wide range of industries.

Find out more about the course here.

Purpose:

This notebook fits a neural network to a spam dataset to classify emails as spam or not spam, based on features derived from the text of each email.

References:

The spam dataset is sourced from the University of California, Irvine Machine Learning Repository: Hopkins, M., Reeber, E., Forman, G., and Suermondt, J. (1999). Spambase Data Set [Dataset]. https://archive.ics.uci.edu/ml/datasets/Spambase.

This dataset contains the following:

4,601 observations, each representing an email originally collected from a Hewlett-Packard email server, of which 1,813 (39%) were identified as spam;

57 continuous features:

48 features of type ‘word_freq_WORD’ that represent the percentage (0 to 100) of words in the email that match ‘WORD’;

6 features of type ‘char_freq_CHAR’ that represent the percentage (0 to 100) of characters in the email that match ‘CHAR’;

1 feature, ‘capital_run_length_average’, that is the average length of uninterrupted sequences of capital letters in the email;

1 feature, ‘capital_run_length_longest’, that is the length of the longest uninterrupted sequence of capital letters in the email;

1 feature, ‘capital_run_length_total’, that is the total number of capital letters in the email; and

a binary response variable that takes on a value 0 if the email is not spam and 1 if the email is spam.
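The feature definitions above can be made concrete with a small sketch that computes each kind of feature for one short email. It is only an illustration under stated assumptions, not the code used to build the dataset: a "word" is taken to be any maximal run of letters and digits, word matching ignores case, and percentages are taken over all words (or all characters) in the email. The helper names `word_freq`, `char_freq` and `capital_runs` are hypothetical.

```python
import re


def word_freq(text: str, word: str) -> float:
    """Percentage (0 to 100) of words in `text` that match `word`.

    Assumption: a word is a maximal run of letters/digits, compared case-insensitively.
    """
    words = re.findall(r"[A-Za-z0-9]+", text)
    if not words:
        return 0.0
    matches = sum(1 for w in words if w.lower() == word.lower())
    return 100.0 * matches / len(words)


def char_freq(text: str, char: str) -> float:
    """Percentage (0 to 100) of characters in `text` that equal `char`."""
    if not text:
        return 0.0
    return 100.0 * text.count(char) / len(text)


def capital_runs(text: str) -> tuple[float, int, int]:
    """Average length, longest length and total length of uninterrupted capital-letter runs."""
    runs = [len(run) for run in re.findall(r"[A-Z]+", text)]
    if not runs:
        return 0.0, 0, 0
    return sum(runs) / len(runs), max(runs), sum(runs)


email = "FREE money!!! Click NOW to get your free money"
print(word_freq(email, "free"))  # 2 of the 9 words match "free"
print(char_freq(email, "!"))     # 3 of the 46 characters are "!"
print(capital_runs(email))       # capital runs are "FREE", "C" and "NOW"
```

The total of the capital runs equals the number of capital letters, which is how ‘capital_run_length_total’ is described above.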

Packages

This section imports the packages that will be required for this exercise/case study.

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

# Used to prepare the data (splitting, scaling, encoding) and to build the neural network.

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

from sklearn.preprocessing import OneHotEncoder

from tensorflow.keras import Sequential

from tensorflow.keras.layers import Dense

Data

This section:

imports the data that will be used in the modelling; and

explores the data.

Import data

# Create a list of headings for the data.

namearray = [

'word_freq_make',

'word_freq_address',

'word_freq_all',

'word_freq_3d',

'word_freq_our',

'word_freq_over',

'word_freq_remove',

'word_freq_internet',

'word_freq_order',

'word_freq_mail',

'word_freq_receive',

'word_freq_will',

'word_freq_people',

'word_freq_report',

'word_freq_addresses',

'word_freq_free',

'word_freq_business',

'word_freq_email',

'word_freq_you',

'word_freq_credit',

'word_freq_your',

'word_freq_font',

'word_freq_000',

'word_freq_money',

'word_freq_hp',

'word_freq_hpl',

'word_freq_george',

'word_freq_650',

'word_freq_lab',

'word_freq_labs',

'word_freq_telnet',

'word_freq_857',

'word_freq_data',

'word_freq_415',

'word_freq_85',

'word_freq_technology',

'word_freq_1999',

'word_freq_parts',

'word_freq_pm',

'word_freq_direct',

'word_freq_cs',

'word_freq_meeting',

'word_freq_original',

'word_freq_project',

'word_freq_re',

'word_freq_edu',

'word_freq_table',

'word_freq_conference',

'char_freq_;',

'char_freq_(',

'char_freq_[',

'char_freq_!',

'char_freq_$',

'char_freq_#',

'capital_run_length_average',

'capital_run_length_longest',

'capital_run_length_total',

'Spam_fl' ]

# Read in the data from the Stanford website.

spam = pd.read_csv("http://www.web.stanford.edu/~hastie/ElemStatLearn/datasets/spam.data", sep=r"\s+",

header=None,

names=namearray

)
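Because `spam.data` has no header row and separates values with whitespace, the call above passes `header=None` and supplies the column names through `names=`. The sketch below applies the same options to a tiny in-memory sample (two made-up rows, three of the columns) via `io.StringIO`, so it runs without network access. It uses `sep=r"\s+"`, which treats any run of whitespace as one delimiter and is the replacement pandas recommends for the deprecated `delim_whitespace=True`.

```python
import io

import pandas as pd

# Two made-up rows in the same layout as spam.data: whitespace-separated, no header row.
sample = io.StringIO(
    "0 0.64 1\n"
    "0.21  0.28 0\n"  # double space: any run of whitespace counts as a single delimiter
)

cols = ["word_freq_make", "word_freq_address", "Spam_fl"]
df = pd.read_csv(sample, sep=r"\s+", header=None, names=cols)

print(df)
print(df.dtypes)  # the frequency columns parse as floats, the flag as integers
```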

Explore data (EDA)

# Summarise the data: dimensions, column names, non-null counts and data types.

print(spam.info())

# Print the first 10 observations from the data.

print(spam.head(10))

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 4601 entries, 0 to 4600

Data columns (total 58 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 word_freq_make 4601 non-null float64

1 word_freq_address 4601 non-null float64

2 word_freq_all 4601 non-null float64

3 word_freq_3d 4601 non-null float64

4 word_freq_our 4601 non-null float64

5 word_freq_over 4601 non-null float64

6 word_freq_remove 4601 non-null float64

7 word_freq_internet 4601 non-null float64

8 word_freq_order 4601 non-null float64

9 word_freq_mail 4601 non-null float64

10 word_freq_receive 4601 non-null float64

11 word_freq_will 4601 non-null float64

12 word_freq_people 4601 non-null float64

13 word_freq_report 4601 non-null float64

14 word_freq_addresses 4601 non-null float64

15 word_freq_free 4601 non-null float64

16 word_freq_business 4601 non-null float64

17 word_freq_email 4601 non-null float64

18 word_freq_you 4601 non-null float64

19 word_freq_credit 4601 non-null float64

20 word_freq_your 4601 non-null float64

21 word_freq_font 4601 non-null float64

22 word_freq_000 4601 non-null float64

23 word_freq_money 4601 non-null float64

24 word_freq_hp 4601 non-null float64

25 word_freq_hpl 4601 non-null float64

26 word_freq_george 4601 non-null float64

27 word_freq_650 4601 non-null float64

28 word_freq_lab 4601 non-null float64

29 word_freq_labs 4601 non-null float64

30 word_freq_telnet 4601 non-null float64

31 word_freq_857 4601 non-null float64

32 word_freq_data 4601 non-null float64

33 word_freq_415 4601 non-null float64

34 word_freq_85 4601 non-null float64

35 word_freq_technology 4601 non-null float64

36 word_freq_1999 4601 non-null float64

37 word_freq_parts 4601 non-null float64

38 word_freq_pm 4601 non-null float64

39 word_freq_direct 4601 non-null float64

40 word_freq_cs 4601 non-null float64

41 word_freq_meeting 4601 non-null float64

42 word_freq_original 4601 non-null float64

43 word_freq_project 4601 non-null float64

44 word_freq_re 4601 non-null float64

45 word_freq_edu 4601 non-null float64

46 word_freq_table 4601 non-null float64

47 word_freq_conference 4601 non-null float64

48 char_freq_; 4601 non-null float64

49 char_freq_( 4601 non-null float64

50 char_freq_[ 4601 non-null float64

51 char_freq_! 4601 non-null float64

52 char_freq_$ 4601 non-null float64

53 char_freq_# 4601 non-null float64

54 capital_run_length_average 4601 non-null float64

55 capital_run_length_longest 4601 non-null int64

56 capital_run_length_total 4601 non-null int64

57 Spam_fl 4601 non-null int64

dtypes: float64(55), int64(3)

memory usage: 2.0 MB

None

word_freq_make word_freq_address word_freq_all word_freq_3d \

0 0.00 0.64 0.64 0.0

1 0.21 0.28 0.50 0.0

2 0.06 0.00 0.71 0.0

3 0.00 0.00 0.00 0.0

4 0.00 0.00 0.00 0.0

5 0.00 0.00 0.00 0.0

6 0.00 0.00 0.00 0.0

7 0.00 0.00 0.00 0.0

8 0.15 0.00 0.46 0.0

9 0.06 0.12 0.77 0.0

word_freq_our word_freq_over word_freq_remove word_freq_internet \

0 0.32 0.00 0.00 0.00

1 0.14 0.28 0.21 0.07

2 1.23 0.19 0.19 0.12

3 0.63 0.00 0.31 0.63

4 0.63 0.00 0.31 0.63

5 1.85 0.00 0.00 1.85

6 1.92 0.00 0.00 0.00

7 1.88 0.00 0.00 1.88

8 0.61 0.00 0.30 0.00

9 0.19 0.32 0.38 0.00

word_freq_order word_freq_mail ... char_freq_; char_freq_( \

0 0.00 0.00 ... 0.00 0.000

1 0.00 0.94 ... 0.00 0.132

2 0.64 0.25 ... 0.01 0.143

3 0.31 0.63 ... 0.00 0.137

4 0.31 0.63 ... 0.00 0.135

5 0.00 0.00 ... 0.00 0.223

6 0.00 0.64 ... 0.00 0.054

7 0.00 0.00 ... 0.00 0.206

8 0.92 0.76 ... 0.00 0.271

9 0.06 0.00 ... 0.04 0.030

char_freq_[ char_freq_! char_freq_$ char_freq_# \

0 0.0 0.778 0.000 0.000

1 0.0 0.372 0.180 0.048

2 0.0 0.276 0.184 0.010

3 0.0 0.137 0.000 0.000

4 0.0 0.135 0.000 0.000

5 0.0 0.000 0.000 0.000

6 0.0 0.164 0.054 0.000

7 0.0 0.000 0.000 0.000

8 0.0 0.181 0.203 0.022

9 0.0 0.244 0.081 0.000

capital_run_length_average capital_run_length_longest \

0 3.756 61

1 5.114 101

2 9.821 485

3 3.537 40

4 3.537 40

5 3.000 15

6 1.671 4

7 2.450 11

8 9.744 445

9 1.729 43

capital_run_length_total Spam_fl

0 278 1

1 1028 1

2 2259 1

3 191 1

4 191 1

5 54 1

6 112 1

7 49 1

8 1257 1

9 749 1

[10 rows x 58 columns]
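A useful reference point when judging the model later is the accuracy of a trivial classifier. Using the class counts given in the dataset description (4,601 emails, of which 1,813 are spam), a rule that labels every email "not spam" is right whenever the email is not spam, about 61% of the time. The arithmetic is sketched below; with the data loaded, `spam["Spam_fl"].value_counts(normalize=True)` should give the same proportions.

```python
# Class counts from the dataset description (4,601 emails, 1,813 spam).
n_emails = 4601
n_spam = 1813
n_not_spam = n_emails - n_spam

spam_share = n_spam / n_emails

# A classifier that always predicts the majority class ("not spam")
# is correct exactly as often as that class occurs.
baseline_accuracy = n_not_spam / n_emails

print(f"spam share: {spam_share:.1%}")
print(f"majority-class baseline accuracy: {baseline_accuracy:.1%}")
```

Any accuracy the neural network reports should be read against this baseline rather than against 50%.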

Modelling

This section:

fits a model; and

evaluates the fitted model.

Fit model

# Build a neural network to classify an email as spam or non-spam.

# Convert the pandas DataFrame to numpy arrays containing the features (X) and response (Y).

X = spam.iloc[:,:-1].values # Drops the last column of the dataframe that contains the spam indicator (response).

Y = spam.iloc[:,57:58].values # Keeps only the spam indicator, as a 2-D column array (the shape OneHotEncoder expects).

# One-hot encode the response variable (0 = not spam, 1 = spam) into two columns, one per class,

# to match the two-neuron softmax output layer.

ohe = OneHotEncoder()

Y = ohe.fit_transform(Y).toarray()

# Split the data into training (75%) and test (25%) sets.

X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.25, random_state=42)

# Standardise the features so that each has mean 0 and standard deviation 1 in the training data.

# The scaler is fit on the training set only, so no information from the test set leaks into training.

sc = StandardScaler()

X_train = sc.fit_transform(X_train)

X_test = sc.transform(X_test)

# Build a neural network with two hidden layers, containing 28 hidden neurons

# (16 neurons in the first hidden layer and 12 neurons in the second hidden layer).

model = Sequential()

# Create the first hidden layer with 16 neurons and a ReLU activation function.

# input_dim=57 matches the number of features in the dataset.

model.add(Dense(16, input_dim=57, activation="relu"))

# Create a second hidden layer with 12 neurons and a ReLU activation function.

model.add(Dense(12, activation="relu"))

# Create the output layer with 2 neurons representing 'not spam' and 'spam'; the softmax activation turns them into class probabilities that sum to 1.

model.add(Dense(2, activation="softmax"))

# Compile the model using the binary cross-entropy loss function (logistic loss),

# the Adam optimiser (adaptive moment estimation), an extension of stochastic gradient

# descent (SGD) that adapts the step size for each parameter, and track the accuracy of the model's predictions.

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

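# Fit the model for 100 epochs with mini-batches of 64, passing the test set as validation data so that test loss and accuracy are reported after each epoch.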

history = model.fit(X_train, Y_train,validation_data = (X_test,Y_test), epochs=100, batch_size=64)
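The model pairs a two-neuron softmax output with the `binary_crossentropy` loss. Keras computes binary cross-entropy for each output column and averages across the columns; since the two softmax outputs sum to 1 and the one-hot targets are complementary, both columns contribute the same term, so the loss reduces to the ordinary logistic loss on the spam probability. The NumPy sketch below checks this on made-up logits and labels. The function names are hypothetical, and the sketch ignores the small epsilon that Keras uses to clip probabilities away from 0 and 1.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def bce_per_column_mean(y_onehot: np.ndarray, probs: np.ndarray) -> float:
    """Binary cross-entropy for each output column, averaged over columns, then over rows."""
    per_entry = -(y_onehot * np.log(probs) + (1 - y_onehot) * np.log(1 - probs))
    return float(per_entry.mean(axis=1).mean())


def logistic_loss(y: np.ndarray, p_spam: np.ndarray) -> float:
    """Standard log loss for a single spam probability per email."""
    return float(-(y * np.log(p_spam) + (1 - y) * np.log(1 - p_spam)).mean())


rng = np.random.default_rng(0)
logits = rng.normal(size=(5, 2))  # made-up network outputs for 5 emails
y = np.array([0, 1, 1, 0, 1])     # made-up labels: 1 = spam
y_onehot = np.eye(2)[y]           # column 0 = not spam, column 1 = spam

probs = softmax(logits)
print(bce_per_column_mean(y_onehot, probs))
print(logistic_loss(y, probs[:, 1]))  # same value: both columns contribute equal terms
```

Under `OneHotEncoder`'s default (sorted) category order, column 0 is "not spam" and column 1 is "spam", so `probs.argmax(axis=1)`, or `model.predict(X_test).argmax(axis=1)` in the notebook, recovers a single 0/1 label per email.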

Epoch 1/100

54/54 [==============================] - 1s 7ms/step - loss: 0.7130 - accuracy: 0.5933 - val_loss: 0.6341 - val_accuracy: 0.7507

Epoch 2/100

54/54 [==============================] - 0s 4ms/step - loss: 0.5768 - accuracy: 0.8310 - val_loss: 0.4987 - val_accuracy: 0.8610

Epoch 3/100

54/54 [==============================] - 0s 7ms/step - loss: 0.4230 - accuracy: 0.8826 - val_loss: 0.3495 - val_accuracy: 0.8923

Epoch 4/100

54/54 [==============================] - 0s 3ms/step - loss: 0.3092 - accuracy: 0.8991 - val_loss: 0.2717 - val_accuracy: 0.9079

Epoch 5/100

54/54 [==============================] - 0s 3ms/step - loss: 0.2585 - accuracy: 0.9145 - val_loss: 0.2361 - val_accuracy: 0.9209

Epoch 6/100

54/54 [==============================] - 0s 4ms/step - loss: 0.2323 - accuracy: 0.9214 - val_loss: 0.2137 - val_accuracy: 0.9305

Epoch 7/100

54/54 [==============================] - 0s 2ms/step - loss: 0.2157 - accuracy: 0.9255 - val_loss: 0.1992 - val_accuracy: 0.9357

Epoch 8/100

54/54 [==============================] - 0s 4ms/step - loss: 0.2034 - accuracy: 0.9307 - val_loss: 0.1902 - val_accuracy: 0.9383

Epoch 9/100

54/54 [==============================] - 0s 3ms/step - loss: 0.1939 - accuracy: 0.9322 - val_loss: 0.1815 - val_accuracy: 0.9401

Epoch 10/100

54/54 [==============================] - 0s 3ms/step - loss: 0.1866 - accuracy: 0.9319 - val_loss: 0.1767 - val_accuracy: 0.9392

Epoch 11/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1812 - accuracy: 0.9339 - val_loss: 0.1717 - val_accuracy: 0.9444

Epoch 12/100

54/54 [==============================] - 0s 4ms/step - loss: 0.1761 - accuracy: 0.9345 - val_loss: 0.1690 - val_accuracy: 0.9470

Epoch 13/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1724 - accuracy: 0.9351 - val_loss: 0.1663 - val_accuracy: 0.9435

Epoch 14/100

54/54 [==============================] - 0s 2ms/step - loss: 0.1689 - accuracy: 0.9351 - val_loss: 0.1615 - val_accuracy: 0.9479

Epoch 15/100

54/54 [==============================] - 0s 3ms/step - loss: 0.1654 - accuracy: 0.9371 - val_loss: 0.1595 - val_accuracy: 0.9461

Epoch 16/100

54/54 [==============================] - 0s 3ms/step - loss: 0.1622 - accuracy: 0.9386 - val_loss: 0.1584 - val_accuracy: 0.9435

Epoch 17/100

54/54 [==============================] - 0s 2ms/step - loss: 0.1598 - accuracy: 0.9388 - val_loss: 0.1553 - val_accuracy: 0.9479

Epoch 18/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1563 - accuracy: 0.9414 - val_loss: 0.1556 - val_accuracy: 0.9435

Epoch 19/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1544 - accuracy: 0.9414 - val_loss: 0.1531 - val_accuracy: 0.9453

Epoch 20/100

54/54 [==============================] - 0s 3ms/step - loss: 0.1515 - accuracy: 0.9446 - val_loss: 0.1533 - val_accuracy: 0.9435

Epoch 21/100

54/54 [==============================] - 0s 3ms/step - loss: 0.1496 - accuracy: 0.9449 - val_loss: 0.1488 - val_accuracy: 0.9470

Epoch 22/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1474 - accuracy: 0.9455 - val_loss: 0.1482 - val_accuracy: 0.9470

Epoch 23/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1451 - accuracy: 0.9443 - val_loss: 0.1479 - val_accuracy: 0.9453

Epoch 24/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1436 - accuracy: 0.9455 - val_loss: 0.1465 - val_accuracy: 0.9461

Epoch 25/100

54/54 [==============================] - 0s 2ms/step - loss: 0.1416 - accuracy: 0.9475 - val_loss: 0.1435 - val_accuracy: 0.9479

Epoch 26/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1401 - accuracy: 0.9470 - val_loss: 0.1425 - val_accuracy: 0.9487

Epoch 27/100

54/54 [==============================] - 0s 2ms/step - loss: 0.1378 - accuracy: 0.9484 - val_loss: 0.1408 - val_accuracy: 0.9487

Epoch 28/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1363 - accuracy: 0.9478 - val_loss: 0.1440 - val_accuracy: 0.9479

Epoch 29/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1341 - accuracy: 0.9513 - val_loss: 0.1403 - val_accuracy: 0.9479

Epoch 30/100

54/54 [==============================] - 0s 2ms/step - loss: 0.1331 - accuracy: 0.9507 - val_loss: 0.1395 - val_accuracy: 0.9487

Epoch 31/100

54/54 [==============================] - 0s 2ms/step - loss: 0.1314 - accuracy: 0.9510 - val_loss: 0.1403 - val_accuracy: 0.9487

Epoch 32/100

54/54 [==============================] - 0s 2ms/step - loss: 0.1297 - accuracy: 0.9516 - val_loss: 0.1387 - val_accuracy: 0.9496

Epoch 33/100

54/54 [==============================] - 0s 2ms/step - loss: 0.1295 - accuracy: 0.9516 - val_loss: 0.1377 - val_accuracy: 0.9487

Epoch 34/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1275 - accuracy: 0.9519 - val_loss: 0.1364 - val_accuracy: 0.9496

Epoch 35/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1260 - accuracy: 0.9542 - val_loss: 0.1345 - val_accuracy: 0.9496

Epoch 36/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1244 - accuracy: 0.9536 - val_loss: 0.1356 - val_accuracy: 0.9496

Epoch 37/100

54/54 [==============================] - 1s 12ms/step - loss: 0.1233 - accuracy: 0.9542 - val_loss: 0.1349 - val_accuracy: 0.9513

Epoch 38/100

54/54 [==============================] - 0s 5ms/step - loss: 0.1219 - accuracy: 0.9542 - val_loss: 0.1333 - val_accuracy: 0.9505

Epoch 39/100

54/54 [==============================] - 0s 4ms/step - loss: 0.1203 - accuracy: 0.9559 - val_loss: 0.1379 - val_accuracy: 0.9479

Epoch 40/100

54/54 [==============================] - 0s 4ms/step - loss: 0.1189 - accuracy: 0.9568 - val_loss: 0.1332 - val_accuracy: 0.9505

Epoch 41/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1187 - accuracy: 0.9588 - val_loss: 0.1325 - val_accuracy: 0.9496

Epoch 42/100

54/54 [==============================] - 0s 2ms/step - loss: 0.1164 - accuracy: 0.9577 - val_loss: 0.1330 - val_accuracy: 0.9513

Epoch 43/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1154 - accuracy: 0.9586 - val_loss: 0.1329 - val_accuracy: 0.9522

Epoch 44/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1139 - accuracy: 0.9591 - val_loss: 0.1317 - val_accuracy: 0.9513

Epoch 45/100

54/54 [==============================] - 0s 2ms/step - loss: 0.1127 - accuracy: 0.9583 - val_loss: 0.1324 - val_accuracy: 0.9513

Epoch 46/100

54/54 [==============================] - 0s 2ms/step - loss: 0.1124 - accuracy: 0.9609 - val_loss: 0.1321 - val_accuracy: 0.9496

Epoch 47/100

54/54 [==============================] - 0s 2ms/step - loss: 0.1123 - accuracy: 0.9606 - val_loss: 0.1301 - val_accuracy: 0.9531

Epoch 48/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1110 - accuracy: 0.9586 - val_loss: 0.1324 - val_accuracy: 0.9496

Epoch 49/100

54/54 [==============================] - 0s 2ms/step - loss: 0.1090 - accuracy: 0.9606 - val_loss: 0.1317 - val_accuracy: 0.9531

Epoch 50/100

54/54 [==============================] - 0s 2ms/step - loss: 0.1086 - accuracy: 0.9617 - val_loss: 0.1333 - val_accuracy: 0.9496

Epoch 51/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1070 - accuracy: 0.9620 - val_loss: 0.1329 - val_accuracy: 0.9487

Epoch 52/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1060 - accuracy: 0.9620 - val_loss: 0.1339 - val_accuracy: 0.9487

Epoch 53/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1052 - accuracy: 0.9638 - val_loss: 0.1348 - val_accuracy: 0.9505

Epoch 54/100

54/54 [==============================] - 0s 3ms/step - loss: 0.1042 - accuracy: 0.9614 - val_loss: 0.1341 - val_accuracy: 0.9470

Epoch 55/100

54/54 [==============================] - 0s 4ms/step - loss: 0.1035 - accuracy: 0.9623 - val_loss: 0.1319 - val_accuracy: 0.9522

Epoch 56/100

54/54 [==============================] - 0s 4ms/step - loss: 0.1044 - accuracy: 0.9635 - val_loss: 0.1307 - val_accuracy: 0.9548

Epoch 57/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1011 - accuracy: 0.9646 - val_loss: 0.1331 - val_accuracy: 0.9505

Epoch 58/100

54/54 [==============================] - 0s 1ms/step - loss: 0.1010 - accuracy: 0.9629 - val_loss: 0.1317 - val_accuracy: 0.9522

Epoch 59/100

54/54 [==============================] - 0s 3ms/step - loss: 0.1000 - accuracy: 0.9641 - val_loss: 0.1302 - val_accuracy: 0.9522

Epoch 60/100

54/54 [==============================] - 0s 2ms/step - loss: 0.0989 - accuracy: 0.9649 - val_loss: 0.1312 - val_accuracy: 0.9513

Epoch 61/100

54/54 [==============================] - 0s 1ms/step - loss: 0.0982 - accuracy: 0.9661 - val_loss: 0.1321 - val_accuracy: 0.9522

Epoch 62/100

54/54 [==============================] - 0s 1ms/step - loss: 0.0975 - accuracy: 0.9652 - val_loss: 0.1326 - val_accuracy: 0.9513

Epoch 63/100

54/54 [==============================] - 0s 1ms/step - loss: 0.0968 - accuracy: 0.9661 - val_loss: 0.1310 - val_accuracy: 0.9531

Epoch 64/100

54/54 [==============================] - 0s 1ms/step - loss: 0.0967 - accuracy: 0.9661 - val_loss: 0.1318 - val_accuracy: 0.9513

Epoch 65/100

54/54 [==============================] - 0s 1ms/step - loss: 0.0959 - accuracy: 0.9664 - val_loss: 0.1344 - val_accuracy: 0.9487

Epoch 66/100

54/54 [==============================] - 0s 1ms/step - loss: 0.0958 - accuracy: 0.9658 - val_loss: 0.1338 - val_accuracy: 0.9496

Epoch 67/100

54/54 [==============================] - 0s 1ms/step - loss: 0.0944 - accuracy: 0.9661 - val_loss: 0.1346 - val_accuracy: 0.9487

Epoch 68/100

54/54 [==============================] - 0s 1ms/step - loss: 0.0932 - accuracy: 0.9670 - val_loss: 0.1339 - val_accuracy: 0.9487

Epoch 69/100

54/54 [==============================] - 0s 1ms/step - loss: 0.0929 - accuracy: 0.9670 - val_loss: 0.1305 - val_accuracy: 0.9531

Epoch 70/100

54/54 [==============================] - 0s 1ms/step - loss: 0.0922 - accuracy: 0.9658 - val_loss: 0.1337 - val_accuracy: 0.9487

Epoch 71/100

54/54 [==============================] - 0s 1ms/step - loss: 0.0917 - accuracy: 0.9675 - val_loss: 0.1312 - val_accuracy: 0.9522

Epoch 72/100

54/54 [==============================] - 0s 2ms/step - loss: 0.0909 - accuracy: 0.9672 - val_loss: 0.1345 - val_accuracy: 0.9496

Epoch 73/100

54/54 [==============================] - 0s 5ms/step - loss: 0.0902 - accuracy: 0.9672 - val_loss: 0.1350 - val_accuracy: 0.9505

Epoch 74/100

54/54 [==============================] - 0s 3ms/step - loss: 0.0896 - accuracy: 0.9672 - val_loss: 0.1337 - val_accuracy: 0.9513

Epoch 75/100

54/54 [==============================] - 0s 1ms/step - loss: 0.0886 - accuracy: 0.9672 - val_loss: 0.1334 - val_accuracy: 0.9540

Epoch 76/100

54/54 [==============================] - 0s 3ms/step - loss: 0.0882 - accuracy: 0.9687 - val_loss: 0.1370 - val_accuracy: 0.9487

Epoch 77/100

54/54 [==============================] - 0s 1ms/step - loss: 0.0880 - accuracy: 0.9690 - val_loss: 0.1359 - val_accuracy: 0.9522

Epoch 78/100

54/54 [==============================] - 0s 2ms/step - loss: 0.0873 - accuracy: 0.9678 - val_loss: 0.1387 - val_accuracy: 0.9479

Epoch 79/100

54/54 [==============================] - 0s 3ms/step - loss: 0.0873 - accuracy: 0.9690 - val_loss: 0.1340 - val_accuracy: 0.9522

Epoch 80/100

54/54 [==============================] - 0s 3ms/step - loss: 0.0859 - accuracy: 0.9681 - val_loss: 0.1334 - val_accuracy: 0.9540

Epoch 81/100

54/54 [==============================] - 0s 3ms/step - loss: 0.0856 - accuracy: 0.9696 - val_loss: 0.1374 - val_accuracy: 0.9505

Epoch 82/100

54/54 [==============================] - 0s 4ms/step - loss: 0.0855 - accuracy: 0.9704 - val_loss: 0.1357 - val_accuracy: 0.9522

Epoch 83/100

54/54 [==============================] - 0s 2ms/step - loss: 0.0848 - accuracy: 0.9696 - val_loss: 0.1343 - val_accuracy: 0.9531

Epoch 84/100

54/54 [==============================] - 0s 3ms/step - loss: 0.0835 - accuracy: 0.9699 - val_loss: 0.1380 - val_accuracy: 0.9522

Epoch 85/100

54/54 [==============================] - 0s 5ms/step - loss: 0.0830 - accuracy: 0.9699 - val_loss: 0.1358 - val_accuracy: 0.9540

Epoch 86/100

54/54 [==============================] - 0s 3ms/step - loss: 0.0831 - accuracy: 0.9699 - val_loss: 0.1377 - val_accuracy: 0.9513

Epoch 87/100

54/54 [==============================] - 0s 1ms/step - loss: 0.0817 - accuracy: 0.9710 - val_loss: 0.1369 - val_accuracy: 0.9522

Epoch 88/100

54/54 [==============================] - 0s 2ms/step - loss: 0.0810 - accuracy: 0.9722 - val_loss: 0.1383 - val_accuracy: 0.9557

Epoch 89/100

54/54 [==============================] - 0s 5ms/step - loss: 0.0815 - accuracy: 0.9704 - val_loss: 0.1362 - val_accuracy: 0.9548

Epoch 90/100

54/54 [==============================] - 0s 2ms/step - loss: 0.0801 - accuracy: 0.9722 - val_loss: 0.1407 - val_accuracy: 0.9522

Epoch 91/100

54/54 [==============================] - 0s 4ms/step - loss: 0.0798 - accuracy: 0.9716 - val_loss: 0.1417 - val_accuracy: 0.9513

Epoch 92/100

54/54 [==============================] - 0s 2ms/step - loss: 0.0796 - accuracy: 0.9707 - val_loss: 0.1374 - val_accuracy: 0.9540

Epoch 93/100

54/54 [==============================] - 0s 2ms/step - loss: 0.0786 - accuracy: 0.9716 - val_loss: 0.1398 - val_accuracy: 0.9548

Epoch 94/100

54/54 [==============================] - 0s 3ms/step - loss: 0.0780 - accuracy: 0.9722 - val_loss: 0.1422 - val_accuracy: 0.9513

Epoch 95/100

54/54 [==============================] - 0s 4ms/step - loss: 0.0776 - accuracy: 0.9730 - val_loss: 0.1419 - val_accuracy: 0.9531

Epoch 96/100

54/54 [==============================] - 0s 3ms/step - loss: 0.0770 - accuracy: 0.9736 - val_loss: 0.1437 - val_accuracy: 0.9522

Epoch 97/100

54/54 [==============================] - 0s 1ms/step - loss: 0.0763 - accuracy: 0.9742 - val_loss: 0.1409 - val_accuracy: 0.9548

Epoch 98/100

54/54 [==============================] - 0s 1ms/step - loss: 0.0762 - accuracy: 0.9730 - val_loss: 0.1415 - val_accuracy: 0.9566

Epoch 99/100

54/54 [==============================] - 0s 2ms/step - loss: 0.0766 - accuracy: 0.9736 - val_loss: 0.1462 - val_accuracy: 0.9505

Epoch 100/100

54/54 [==============================] - 0s 2ms/step - loss: 0.0758 - accuracy: 0.9757 - val_loss: 0.1436 - val_accuracy: 0.9557
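The counts in the training log can be reconciled with the data split. The "54/54" on each line is the number of mini-batches per epoch: scikit-learn's `train_test_split` rounds a fractional test size up, giving a test set of ceil(0.25 × 4,601) = 1,151 emails and a training set of 3,450, and 3,450 rows in batches of 64 need 54 batches, the last one partly filled. The same sizes turn the final test accuracy of 0.9557 back into a count of correctly classified emails. A short sketch of the arithmetic:

```python
import math

n_emails = 4601
test_size = 0.25
batch_size = 64

# train_test_split rounds a fractional test size up; the remainder is training data.
n_test = math.ceil(test_size * n_emails)
n_train = n_emails - n_test

# Each epoch runs through the training data in batches of 64; the last batch holds the remainder.
n_batches = math.ceil(n_train / batch_size)
last_batch = n_train - (n_batches - 1) * batch_size

# Convert the final reported test accuracy (epoch 100) into counts of emails.
final_val_accuracy = 0.9557
n_correct = round(final_val_accuracy * n_test)
n_wrong = n_test - n_correct

print(n_train, n_test, n_batches, last_batch)
print(n_correct, n_wrong, n_correct / n_test)
```

On this reading, roughly 51 of the 1,151 test emails are misclassified after 100 epochs.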

Evaluate model

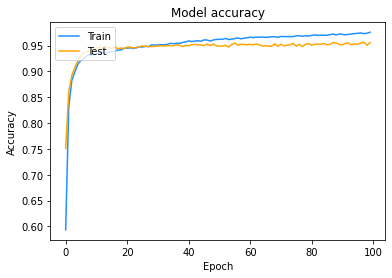

# Plot the accuracy of the fitted model on the training and test data.

plt.plot(history.history['accuracy'], color='dodgerblue')

plt.plot(history.history['val_accuracy'],color='orange')

plt.title('Model accuracy')

plt.ylabel('Accuracy')

plt.xlabel('Epoch')

plt.legend(['Train', 'Test'], loc='upper left')

plt.show()

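The plot of the model’s accuracy against the number of epochs (an epoch is one full pass through the training data) suggests that accuracy on the test data does not improve much beyond approximately 10 epochs. In the training log, test accuracy reaches 0.939 at epoch 10 and then only edges up to around 0.95, while training accuracy keeps rising to 0.976 by epoch 100. Meanwhile, the test loss is lowest at 0.130 (epoch 47) and drifts back up to 0.144 by epoch 100, which points to mild overfitting in the later epochs.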
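Since extra epochs add little here, a common alternative to a fixed `epochs=100` is early stopping: track the validation loss and stop once it has failed to improve for a set number of epochs (the patience), keeping the weights from the best epoch. Keras provides this as the `tf.keras.callbacks.EarlyStopping` callback (with `monitor`, `patience`, `min_delta` and `restore_best_weights` arguments). The plain-Python sketch below only illustrates the stopping rule on a short, made-up loss sequence; it is not the Keras implementation, and `early_stopping_epoch` is a hypothetical helper. Because this notebook passes the test set as validation data, choosing the epoch this way would make the test score optimistic, so a separate validation split would be needed for an unbiased test estimate.

```python
def early_stopping_epoch(
    val_losses: list[float], patience: int, min_delta: float = 0.0
) -> tuple[int, int]:
    """Return (stop_epoch, best_epoch), both 1-based, under a patience rule.

    Training stops after `patience` consecutive epochs in which the validation
    loss fails to beat the best value so far by more than `min_delta`.
    """
    best_loss = float("inf")
    best_epoch = 0
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    # The rule never triggered, so training runs for every epoch supplied.
    return len(val_losses), best_epoch


# Made-up validation losses that fall, flatten out and then drift upwards.
losses = [0.63, 0.50, 0.35, 0.27, 0.24, 0.22, 0.21, 0.215, 0.212, 0.22, 0.23]
stop, best = early_stopping_epoch(losses, patience=3)
print(f"stop after epoch {stop}; restore weights from epoch {best}")
```

In the notebook itself, the equivalent would be passing `callbacks=[EarlyStopping(monitor="val_loss", patience=..., restore_best_weights=True)]` to `model.fit`, with the patience chosen by judgement.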